8The Hessian

In Chapter 6 we exploited the second derivative of a one variable real function to analyze convexity along with local minima and maxima.In this chapter we introduce an analogue of the second derivative for real functions of several variables. This will be an matrix. The important notion of a matrix being positive (semi-) definite introduced in Section 3.7 will now make its appearance.8.1 Introduction

In Section 6.4 the Taylor expansion for a one variable differentiable function centered a with step size was introduced as Recall that the second derivative contains a wealth of information about the function. Especially if , then we might glean from if is a local maximum or minimum or none of these (see Theorem 6.43 and review Exercise 6.46).We also noticed that gradient descent did not work so well only descending along the gradient. We need to take the second derivative into account to get a more detailed picture of the function.8.2 Several variables

Our main character is a differentiable function in several variables. We already know that where and are vectors in (as opposed to the good old numbers in (8.1)). Take a look back at Definition 7.5 for the general definition of differentiability.We wish to have an analogue of the Taylor expansion in (8.1) for such a function of several variables. To this end we introduce the function given by Notice that where is the function given by . In particular we get by using the chain rule (see Theorem 7.24).

Explain how the chain rule is applied to get (8.3).

The derivative is also composed of several functions and again we may

compute by using the chain rule:

where is defined by

and by

The Hessian matrix of at the point

is defined by

Why is the Hessian matrix symmetric?

Suppose that is given by

Then the gradient

and the Hessian

of are computed in the Sage window below.See the further documentation for Calculus functions in Sage.

Verify (just this once) by hand the computations done by Sage in Example 8.4.Also, experiment with a few other functions in the Sage window and compute their

Hessians.

By applying Proposition 7.11 it is not too hard to see that the Hessian

matrix fits nicely into the framework above, since

The full application of the chain rule then gives

Give a detailed explanation as to why (8.5) holds.

8.3 Newton's method for finding critical points

We may use Newton's method for computing critical points for a function of several variables. Recall that a critical point is a point with . By (7.8) and (8.5) the computation in Newton's method becomes In practice the (inverse) Hessian appearing in (8.7) is often a heavy computational burden. This leads to the socalled quasi-Newton methods, where the inverse Hessian in (8.7) is replaced by other matrices.

We will return to the logistic regression in Example 7.34 about the

Challenger disaster. Here we sought to maximize the function

In order to employ Newton's method we compute the gradient and the Hessian of (8.8)

where

is the sigmoid function.Notice the potential problem in using Newton's method here: the formula for

the second order derivatives in (8.9) show that if the are just mildly big, say , then

the Hessian is extremely close to the zero matrix and therefore Sage considers it non-invertible and

(8.7) fails.In the code below we have nudged the initial vector so that it works, but you

can easily set other values and see its failure. Optimization is not just mathematics, it also calls

for some good (engineering) implementation skills (see for example details on the

quasi Newton algorithms).In the instance below we do, however, get a gradient that is practically .Code for Newton's method

8.3.1 Transforming data for better numerical performance

The numerical problems with Newton's method in Example 8.7 can be prevented by transforming the input data. It makes sense to transform data from large numbers to smaller numbers around . There is a rather standard way of doing this.Suppose in logistic regression we have a set of data associated with outcomes . Then the function from Example 7.32 becomes much more manageable if we first transform the data according to and instead optimize the function Here is the mean value and the variance of the data in (8.10).Now if and is an optimum for , then is an optimum for , since

Why is the claim/trick alluded to in (8.11) true?Below is a snippet of Sage code implementing the trick in (8.11).

The function test takes as input x0 (an initial vector like [0,0]) and

noofits (the number of iterations of Newton's method). You execute this in the Sage

window by adding for example

test([0,0], 10)

8.4 The Taylor series in several variables

Now we are in a position to state at least the first terms in the Taylor expansion for a differentiable function . The angle of the proof is to reduce to the one-dimensional case through the function defined in (8.2). Here one may prove that where with continuous at , much like in the definition of differentiability except that we also include the second derivative.Now (8.12) translates into by using (8.3) and (8.6).From (8.13) one reads the following nice criterion, which may be viewed as a several variable generalization of Theorem 6.43.

Let be a critical point for . Then

- is a local minimum if is positive definite.

- is a local maximum if is positive definite (here we call negative definite).

- is a saddle point if is indefinite.

We need to clarify two things concerning the above theorem.

- An indefinite matrix is a symmetric matrix with the property that there exists with i.e., neither nor is positive semidefinite.

-

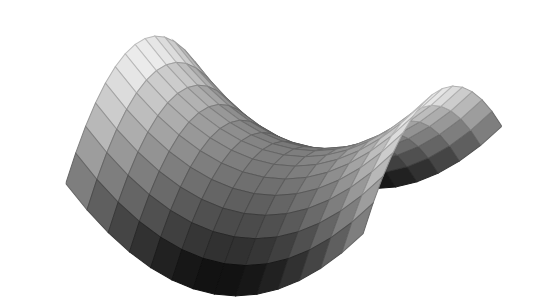

A saddle point for is defined by the existence of two vectors , such that

as illustrated in the graphics below.

Consider, with our new technology in Theorem 8.9, Exercise 7.22 once again.

Here we analyzed the point for the function

and showed (by a trick) that is neither a local maximum nor a local minimum for . The Hessian

matrix for at is

Now

Theorem 8.9(ⅲ.) shows that is a saddle point, since

and

Try plotting the graph for different values of aa=4 shows the saddle point clearly. in the Sage window in

Example 8.11. What do you observe for the point with

respect to the function? Does a have to be a number? Could it be a symbolic

expression in the variables x and y like a = -10*cos(x)*sin(y)?

Check the computation of the Hessian matrix in Example 8.11 by showing

that the Hessian matrix for at the point is

Give an example of a function having a local minimum at

, where is not positive definite.

The following exercise is a sci2u exercise from the Calculus book.

Consider the function

Compute its critical points and decide on their types according to Theorem 8.9.

Try to convince yourself that

for every .

Look at the minimization problem

subject to

where is a big number.

Give an example of a function that has a local maximum, but where

there exists with for any given (large) number .

8.5 Convex functions of several variables

Below is the generalization of Theorem 6.53 to several variables. You have already seen this in Exercise 6.54, right?

Let be a differentiable function, where

is an open convex subset. Then is convex if

and only if

for every .

Suppose that (8.14) holds and let with

, where . To prove that is convex

we must verify the inequality

Let . Then

by (8.14). If you multiply the first inequality by , the

second by and then add the two, you get (8.15).Suppose on the other hand that is a convex function. Let . Since is an open subset, it follows that for , where is

sufficiently small. Now define the function by

Being the composition of two differentiable functions, is

differentiable. Suppose that and . Then

showing that is a convex function. By Theorem 6.53,

which translates into

by using the chain rule in computing .

Prove that a bounded convex differentiable function is

constant.

The following is the generalization of Corollary 6.48.

Let be a differentiable function with continuous

second order partial derivatives, where is a

convex open subset. Then is convex if and only if the Hessian

is positive semidefinite for every . If

is positive definite for every , then is

strictly convex.

We have done all the work for a convenient reduction to the one

variable case. Suppose that is convex. Then the same reasoning

as in the proof of Theorem 8.19 shows that

is a convex function for every and every from

an open interval to for suitable

. Therefore by

Theorem 6.47. This proves that the matrix is

positive semidefinite for every . Suppose on the other hand

that is positive semidefinite for every .

Then Theorem 6.47 shows that is a convex

function from to for small

and , since

for . Therefore is a convex function, since

The same argument (using the last part of Theorem 6.47 on

strict convexity), shows that is strictly convex if

is positive definite. It follows that is strictly

convex if is positive definite for every .

Prove that

is a strictly convex function from to . Also, prove that

is a convex subset of .

Is strictly convex on some non-empty

open convex subset of the plane?

Show that given by

is a convex function. Is strictly convex?

Let be given by

where .

- Show that is a strictly convex function if and only if

and . This is a hint for the only if part. If is the Hessian for , then where - this is seen by a matrix multiplication computation. We know that is positive semidefinite. If was not positive definite, there would exist with . Now use to complete the proof that is positive definite by looking at .

- Suppose now that and . Show that has a unique global minimum and give a formula for this minimum in terms of and .

8.6 How to decide the definiteness of a matrix

In this section we will outline a straightforward method for deciding if a matrix is positive definite, positive semidefinite, negative definite or indefinite.Before proceeding it is a must that you do the following exercise.

Show that a diagonal matrix

is positive definite if and only if

and positive semidefinite

if and only if .Also, show that if is a symmetric matrix, then is

positive definite if and only if

is positive definite, where is an invertible matrix.

The crucial ingredient is the following result.

Let be a real symmetric matrix. Then there exists an

invertible matrix , such that is a diagonal matrix.

Suppose that . If has a non-zero entry in the upper

left hand corner i.e., , then

where is a real symmetric matrix and is the

invertible matrix

By induction on we may find an invertible matrix matrix such that

Putting

it follows that

We now treat the case of a zero entry in the upper left hand corner

i.e., . Suppose first that for

some . Let denote the identity matrix with the first and

-th rows interchanged. The operation amounts to

interchanging the first and -th columns in . Similarly

is interchanging that first and -th rows in .

The matrix is invertible and is a symmetric matrix

with and we have reduced to the case

of a non-zero entry in the upper left hand corner.If for every we may assume that for some . Let denote the identity matrix where

the entry in the first column and -th row is . The operation

amounts to adding the -th column to the first

column in . Similarly is adding the -th row

to the first row in . All in all we get , where we have used that for . Again we have reduced to the case of a non-zero entry in the

upper left hand corner.

Consider the real symmetric matrix.

Here . Therefore the fundamental step in the

proof of Theorem 8.27 applies and

and again

Summing up we get

You are invited to check that

Let

Here , but the diagonal element . So we are in the second step of the proof of

Theorem 8.27. Using the matrix

we get

As argued in the proof, this corresponds to interchanging the first

and third columns and then interchanging the first and third

rows. In total you move the non-zero to the upper left

corner in the matrix.

Consider the symmetric matrix

We have zero entries in the diagonal. As in the third step in the

proof of Theorem 8.27 we must find an invertible matrix

, such that the upper left corner in is

non-zero. In the proof it is used that every diagonal element is

zero: if we locate a non-zero element in the -th column in the

first row, we can add the -th column to the first column and then

the -th row to the first row obtaining a non-zero element in the

upper left corner. For above we choose and the matrix

becomes

so that

Let be any matrix. Show that

is positive semidefinite.

Find inequalities defining the set

Same question with positive semidefinite. Sketch and compare the two

subsets of the plane .

Let denote the function given by

where . Let denote the Hessian of

in a point .

- Compute .

- Show that for and .

- Compute a non-zero vector , such that in the case, where . Is invertible in this case?

- Show that is strictly convex if .

-

Is strictly convex if ?HintConsider the line segment between and a suitable vector , where .

Why is the subset given by the inequalities

a convex subset of ?